Course Summary

Machine learning (ML) is increasingly used in real-world applications. However, research has shown that ML models can be highly vulnerable to adversarial examples: inputs intentionally crafted to fool a model into making incorrect predictions. This course uses MLsploit to demonstrate these vulnerabilities and to build more secure AI across several domains.
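
To make "adversarial example" concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), a well-known attack that is not necessarily one of the MLsploit modules. It assumes a hypothetical toy logistic-regression model with hand-picked weights `w` and bias `b`, and shifts every input feature by `eps` in the direction that increases the model's loss.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: list[float], b: float, x: list[float]) -> float:
    """Probability of class 1 under a toy (hypothetical) logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def _sign(g: float) -> int:
    return (g > 0) - (g < 0)


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """FGSM: x_adv = x + eps * sign(dLoss/dx), with binary cross-entropy loss."""
    p = predict(w, b, x)
    # For a logistic model with cross-entropy loss, dLoss/dx_i = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * _sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical model and input, chosen only for illustration.
w, b = [2.0, -3.0, 1.0], 0.0
x, y, eps = [0.5, 0.1, 0.2], 1, 0.3

x_adv = fgsm(w, b, x, y, eps)
print(f"{predict(w, b, x):.2f}")      # → 0.71 (classified as 1)
print(f"{predict(w, b, x_adv):.2f}")  # → 0.29 (now classified as 0)
```

Each feature moves by at most `eps`, yet the prediction flips; attacks on real image or malware classifiers follow the same idea with far more features and tighter perturbation budgets.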

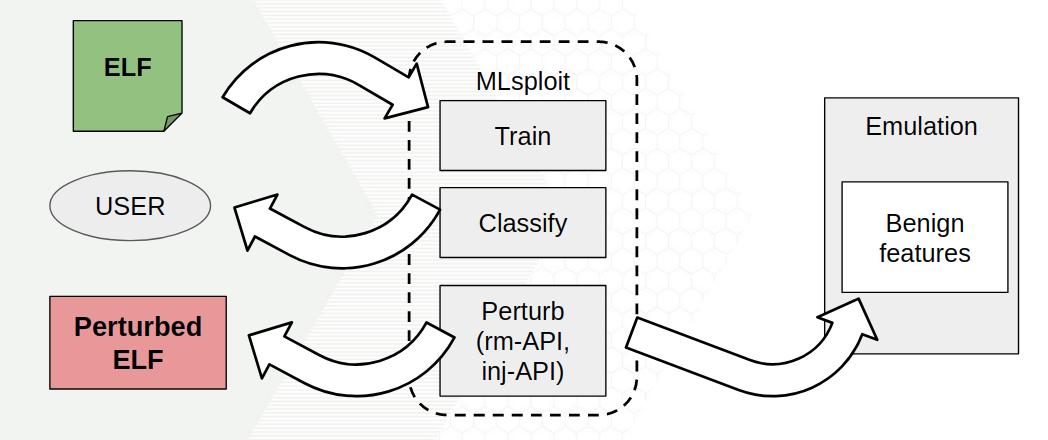

MLsploit is an ML evaluation and fortification framework designed for education and research. It focuses on ML security techniques in adversarial settings, such as adversarial example creation, detection, and countermeasures. It consists of pluggable modules, each demonstrating a security-related research topic.

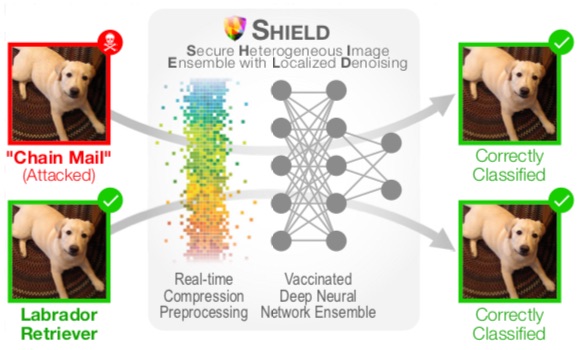

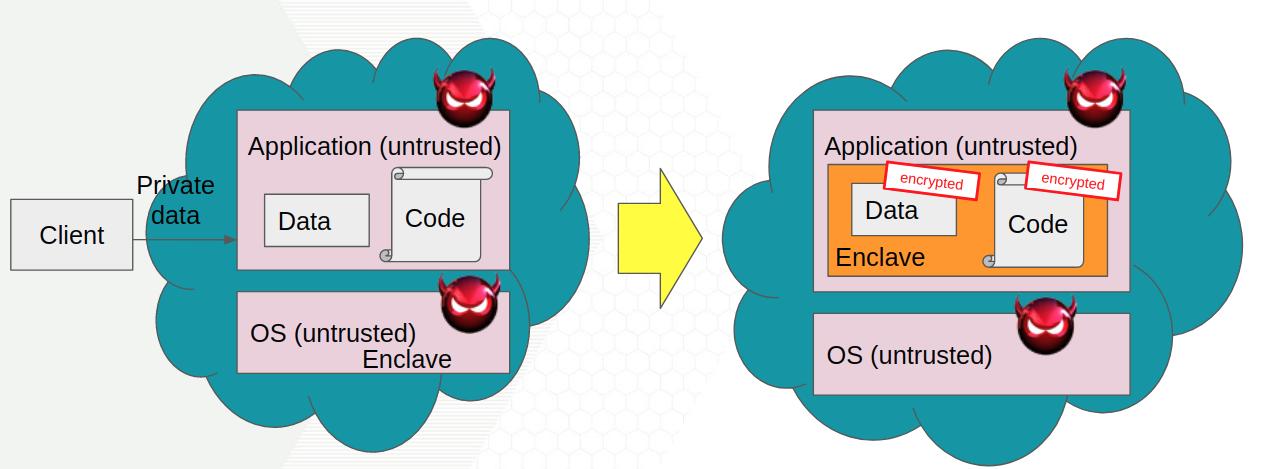

This course covers several built-in MLsploit modules, including image-domain defense (SHIELD), malware detection and evasion (AVPass, ELF, Barnum), and the use of Intel SGX for privacy-preserving, inference-preventing ML.

To get started, set up MLsploit using the MLsploit REST API, MLsploit Execution Backend, and MLsploit Web UI repositories, and then check out the various MLsploit modules below.